Apache Ozone vs HDFS

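Ozone and HDFS are both distributed storage systems designed to handle large amounts of data across a cluster: HDFS is a file system, while Ozone is an object store that also exposes a Hadoop-compatible file system interface. They have different architectures and suit different use cases.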

Introduction

Ozone:

Apache Ozone is a distributed object store built for modern big data workloads. These workloads differ considerably from regular workloads, and Ozone's design draws on the lessons learned from running Hadoop on thousands of clusters.

HDFS:

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware. HDFS is highly fault-tolerant and is meant to be deployed on low-cost hardware.

Comparison Ozone vs HDFS

| Feature | Ozone | HDFS |
| --- | --- | --- |
| Storage | Up to 1000 nodes and 600 TB of storage | Up to 1000 nodes and 100 TB of storage |
| Scalability | More than 10 billion objects | More than 400 million objects |
| Recovery | Fast: under 5 minutes to start | Slower: start time grows with namespace size |
| Availability | Active-Active | Active-Standby |
| Protocol support | Hadoop API / S3 | Hadoop API |

Architecture

Ozone:

– Ozone is built with a scale-out architecture and has low operational overhead.

– Ozone was made with scalability in mind, and its goal is to handle billions of objects.

– Ozone can be co-located with HDFS under the same security and governance policies, which makes it easy to move or exchange data and to migrate applications.

– Namespace management and block space management are kept separate in Ozone. The Ozone Manager (OM) is in charge of the namespace, and the Storage Container Manager (SCM) is in charge of the block space.

– Ozone's three main storage elements are volumes, buckets, and keys. Every key is part of a bucket, and every bucket is part of a volume. Only an administrator can create a volume. Regular users can create as many buckets as they need.

– Buckets live inside volumes. Once a volume has been created, users can create as many buckets in it as they need.

– Ozone stores data as keys that live in these buckets.
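The volume, bucket, and key hierarchy described above can be sketched as a toy in-memory model. This is only an illustration of the containment and permission rules from the list; the class and method names (`Namespace`, `create_volume`, and so on) are hypothetical and are not part of any Ozone API.

```python
from dataclasses import dataclass, field


@dataclass
class Bucket:
    name: str
    keys: dict = field(default_factory=dict)  # key name -> data


@dataclass
class Volume:
    name: str
    owner: str
    buckets: dict = field(default_factory=dict)  # bucket name -> Bucket


class Namespace:
    """Toy model of the volume -> bucket -> key hierarchy (not the Ozone API)."""

    def __init__(self, admins):
        self.admins = set(admins)
        self.volumes = {}

    def create_volume(self, user, name):
        # Rule from the text: only an administrator can create a volume.
        if user not in self.admins:
            raise PermissionError(f"{user} is not an administrator")
        self.volumes[name] = Volume(name=name, owner=user)
        return self.volumes[name]

    def create_bucket(self, volume, name):
        # Rule from the text: buckets can be created once the volume exists.
        bucket = Bucket(name=name)
        self.volumes[volume].buckets[name] = bucket
        return bucket

    def put_key(self, volume, bucket, key, data):
        # Every key belongs to exactly one bucket in one volume.
        self.volumes[volume].buckets[bucket].keys[key] = data

    def get_key(self, volume, bucket, key):
        return self.volumes[volume].buckets[bucket].keys[key]


ns = Namespace(admins={"admin"})
ns.create_volume("admin", "user")
ns.create_bucket("user", "foo")
ns.put_key("user", "foo", "databases/managed/t1", b"row data")
print(ns.get_key("user", "foo", "databases/managed/t1"))
```

In this sketch a key name may contain slashes, which mirrors how an object store treats a path-like key as a single flat name inside its bucket.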

HDFS:

– HDFS has a master/slave architecture. An HDFS cluster consists of a single NameNode, a master server that manages the file system namespace and regulates client access to files.

– The NameNode is commodity hardware that runs the GNU/Linux operating system and the NameNode software.

– The machine running the NameNode acts as the master server, and it does the following:

• Manages the file system namespace.

• Executes file system operations such as renaming, closing, and opening files and directories.

– There are also several DataNodes, usually one for each node in the cluster. These DataNodes manage the storage connected to the nodes they run on.

– HDFS exposes a file system namespace and lets users store data in files. Internally, a file is split into one or more blocks, and these blocks are stored in a set of DataNodes.

– The NameNode handles file system namespace operations like opening, closing, and renaming files and directories.

– It also determines the mapping of blocks to DataNodes. The DataNodes are responsible for serving read and write requests from the file system's clients.
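How a file is split into blocks and how those blocks are mapped to DataNodes can be illustrated with a short sketch. It assumes a 128 MB block size and a replication factor of 3 (the usual HDFS defaults) and uses a simple round-robin placement; real HDFS placement is rack-aware, so the `place_blocks` function here is a simplification, and both function names are hypothetical.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size: 128 MB


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the size in bytes of each block for a file of file_size bytes."""
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block may be smaller
    return sizes


def place_blocks(num_blocks, datanodes, replication=3):
    """Map each block index to the DataNodes holding its replicas.

    Round-robin placement for illustration only; not the real HDFS policy.
    """
    if replication > len(datanodes):
        raise ValueError("not enough DataNodes for the replication factor")
    n = len(datanodes)
    return {
        block: [datanodes[(block + r) % n] for r in range(replication)]
        for block in range(num_blocks)
    }


sizes = split_into_blocks(300 * 1024 * 1024)  # a 300 MB file
mapping = place_blocks(len(sizes), ["dn1", "dn2", "dn3", "dn4"])
for block, nodes in mapping.items():
    print(f"block {block} ({sizes[block]} bytes) -> {nodes}")
```

The dictionary returned by `place_blocks` plays the role of the block map that the NameNode keeps, while the DataNodes named in it would hold the actual bytes.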

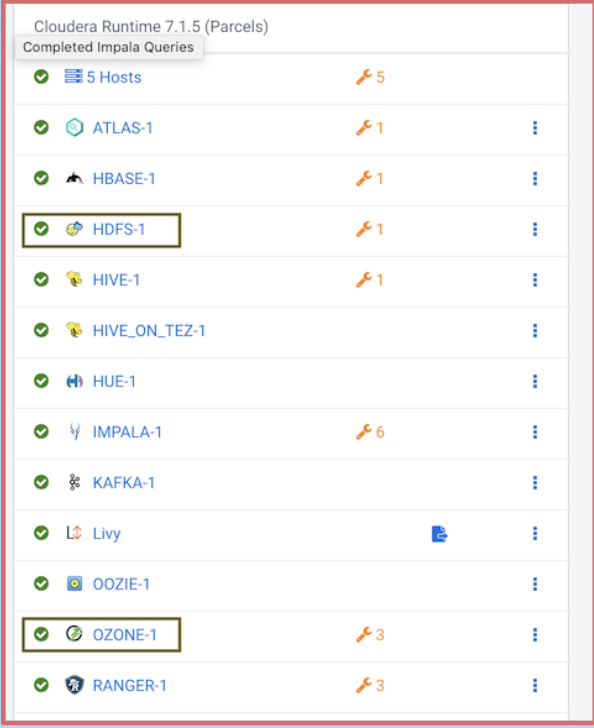

Ozone + HDFS Side by Side

Cloudera Example:

- Ozone and HDFS can co-exist in the same cluster.

- Storage nodes can be segregated, or shared with segregated volumes.

- Applications integrate seamlessly with HDFS (same core-site.xml).

Commands

Ozone:

ozone sh vol create user

ozone fs -ls ofs://ozone/

ozone fs -mkdir -p ofs://ozone/user/foo/databases/managed

ozone fs -mkdir -p ofs://ozone/user/foo/databases/external

ozone fs -ls ofs://ozone/user/foo/databases/

The hdfs dfs commands also work with Ozone.
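The ofs:// paths in the Ozone commands above follow the layout `ofs://<service ID or host>/<volume>/<bucket>/<key path>`. As a rough sketch of how such a path breaks down, the hypothetical helper below (`parse_ofs_path` is not part of any Ozone client library) splits a path into those parts using only the Python standard library.

```python
from typing import NamedTuple, Optional
from urllib.parse import urlsplit


class OfsPath(NamedTuple):
    authority: str          # Ozone Manager service ID or host
    volume: Optional[str]
    bucket: Optional[str]
    key: Optional[str]      # remaining path inside the bucket


def parse_ofs_path(uri):
    """Split an ofs:// URI into authority, volume, bucket, and key."""
    parts = urlsplit(uri)
    if parts.scheme != "ofs":
        raise ValueError(f"not an ofs path: {uri}")
    segments = [s for s in parts.path.split("/") if s]
    volume = segments[0] if len(segments) > 0 else None
    bucket = segments[1] if len(segments) > 1 else None
    key = "/".join(segments[2:]) or None
    return OfsPath(parts.netloc, volume, bucket, key)


print(parse_ofs_path("ofs://ozone/user/foo/databases/managed"))
```

Read this way, `ofs://ozone/user/foo/databases/managed` refers to the volume `user`, the bucket `foo`, and the key path `databases/managed` on the service named `ozone`.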

HDFS:

hdfs dfs -ls /

hdfs dfs -mkdir /test

hdfs dfs -rm /test/testing.txt

The syntax of this command set is similar to that of other shells (such as bash), so users can pick it up quickly.

Multi-Protocol Support

Ozone:

Ozone provides a Hadoop-compatible file system, OzoneFS. Applications like Hive, Spark, YARN, or MapReduce run on OzoneFS without any modifications.

HDFS:

Hive, Spark, MapReduce, and HBase are some of the many applications that HDFS supports.

File System

Ozone:

All distributed systems can be examined from various angles.

One way to think about Ozone is to view the Ozone Manager as a namespace service built on top of HDDS, a distributed block store.

Another way to visualize Ozone is by its functional layers: the Ozone Manager and the Storage Container Manager make up the metadata management layer.

The data nodes provide the storage layer, which SCM manages. The replication layer, provided by Ratis, replicates metadata (both OM and SCM) and keeps data consistent when it is modified at the data nodes. Recon, the management server, communicates with every other Ozone component and provides a unified management API and user interface.

A protocol bus allows Ozone to be extended with other protocols. Currently, only the S3 protocol is supported through the protocol bus. The protocol bus lets you implement new file system or object store protocols that call into the O3 native protocol.

HDFS:

With HDFS, you can arrange your files in a standard hierarchical structure. Any program or user can create a directory and store files in it.

The file system’s namespace hierarchy is conventional and consistent with other file systems.

Files can be created, deleted, renamed, and moved between directories. HDFS supports user quotas and access permissions. HDFS does not support hard links or soft links.

However, the HDFS architecture does not preclude implementing these features. The NameNode maintains the file system namespace.

The NameNode records any change to the file system namespace or its properties. An application can specify the number of replicas of a file that HDFS should maintain.

The number of copies of a file is called its replication factor. This information is stored by the NameNode.
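Because the replication factor is set per file, the raw disk space a set of files consumes is the sum of each file's size multiplied by its own replication factor. The sketch below works through that arithmetic; the function name `raw_usage_bytes` and the sample file names are made up for illustration.

```python
def raw_usage_bytes(files):
    """Total raw bytes consumed, given {path: (size_bytes, replication_factor)}."""
    return sum(size * replication for size, replication in files.values())


GB = 1024 ** 3
files = {
    "/data/events.parquet": (10 * GB, 3),  # default-style replication
    "/tmp/scratch.bin": (4 * GB, 1),       # single copy, no redundancy
}
total = raw_usage_bytes(files)
print(f"logical: {14} GB, raw: {total // GB} GB")
```

Here 14 GB of logical data occupies 34 GB of raw storage, since the 10 GB file is stored three times and the 4 GB file once.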

Most Recent Release

Ozone: 1.0.0

HDFS: 3.3.4

Recovery

Ozone:

Ozone is a fully replicated system that is designed to survive multiple failures.

HDFS:

One of HDFS’s best features is that it can recover from disasters like cluster-wide power loss without losing any data.

Small File Problem:

Ozone:

Ozone is designed to handle tens of billions of files and blocks, and potentially more in the future. As a key-value store, Ozone handles both large and small files.

HDFS:

HDFS works best when most files are between tens and hundreds of megabytes. It struggles beyond roughly 400 million files, and small files make this worse, because the NameNode keeps the metadata for every file and block in memory.
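A back-of-the-envelope estimate shows why many small files strain the NameNode. The sketch below assumes a 128 MB block size and roughly 150 bytes of NameNode heap per namespace object (one object per file plus one per block). The 150-byte figure is a commonly quoted rule of thumb, not a number from this article, and real usage varies, so treat the results as orders of magnitude only.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size: 128 MB
BYTES_PER_OBJECT = 150          # assumed heap cost per file or block object


def namenode_objects(num_files, file_size, block_size=BLOCK_SIZE):
    """Count namespace objects: one per file plus one per block."""
    blocks_per_file = max(1, -(-file_size // block_size))  # ceiling division
    return num_files * (1 + blocks_per_file)


def estimated_heap_bytes(num_files, file_size):
    return namenode_objects(num_files, file_size) * BYTES_PER_OBJECT


MB = 1024 * 1024
TB = 1024 ** 4

# The same 1 TB of data stored as 1 MB files versus 128 MB files.
small = namenode_objects(TB // MB, 1 * MB)
large = namenode_objects(TB // (128 * MB), 128 * MB)
print(f"1 MB files:   {small} objects")
print(f"128 MB files: {large} objects")
print(f"ratio: {small // large}x")
```

Under these assumptions, storing the same data as 1 MB files creates 128 times as many NameNode objects as storing it in block-sized files, and 400 million single-block files would need on the order of 120 GB of heap.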

Conclusion

Ozone was created to address most of the problems encountered with HDFS. Choose between the two based on your use case.

Good luck with your learning!